Operational Readiness is a well-known discipline. Activities such as training, tabletop exercises, rehearsals and simulations all help prepare a team for day 1 of operations.

But how do we measure readiness? How do we know if we are 100% ready, or maybe only 85% ready? How is that broken down across the services, facilities, or teams involved?

The starting point is to identify what we need to be ready for. What is the target in terms of services, facilities/venues, processes and teams? For example, in major events, readiness for a test event is a very different goal from final event readiness: the latter requires all services and venues to be ready, while the former requires only a subset. Similarly, at an enterprise level, critical services are typically released in phases. Each operational readiness testing period should therefore have a well-defined scope of what is to be operated and tested, which can be captured in a corresponding strategy or plan.
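One way to keep each testing period's scope explicit is to record it as data and compare it with the final scope. The sketch below is a minimal illustration, assuming a hypothetical `PhaseScope` record with made-up service and venue names; it shows that a test event covers only a subset of the final event and lists what it does not yet exercise.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PhaseScope:
    """Services and venues in scope for one readiness testing period (hypothetical model)."""
    name: str
    services: frozenset
    venues: frozenset

    def covers(self, other: "PhaseScope") -> bool:
        """True if every service and venue in `other` is also in this scope."""
        return self.services >= other.services and self.venues >= other.venues


def not_yet_exercised(phase: PhaseScope, final: PhaseScope) -> dict:
    """Items the final scope requires that this phase does not test."""
    return {
        "services": sorted(final.services - phase.services),
        "venues": sorted(final.venues - phase.venues),
    }


# Hypothetical example: a test event exercises only a subset of the final scope.
test_event = PhaseScope(
    "test event",
    services=frozenset({"results", "network"}),
    venues=frozenset({"arena"}),
)
final_event = PhaseScope(
    "final event",
    services=frozenset({"results", "network", "ticketing", "accreditation"}),
    venues=frozenset({"arena", "stadium", "media centre"}),
)
print(not_yet_exercised(test_event, final_event))
```

Making the gap between phases visible in this way helps confirm that everything needed on day 1 is tested in at least one period before the final event.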

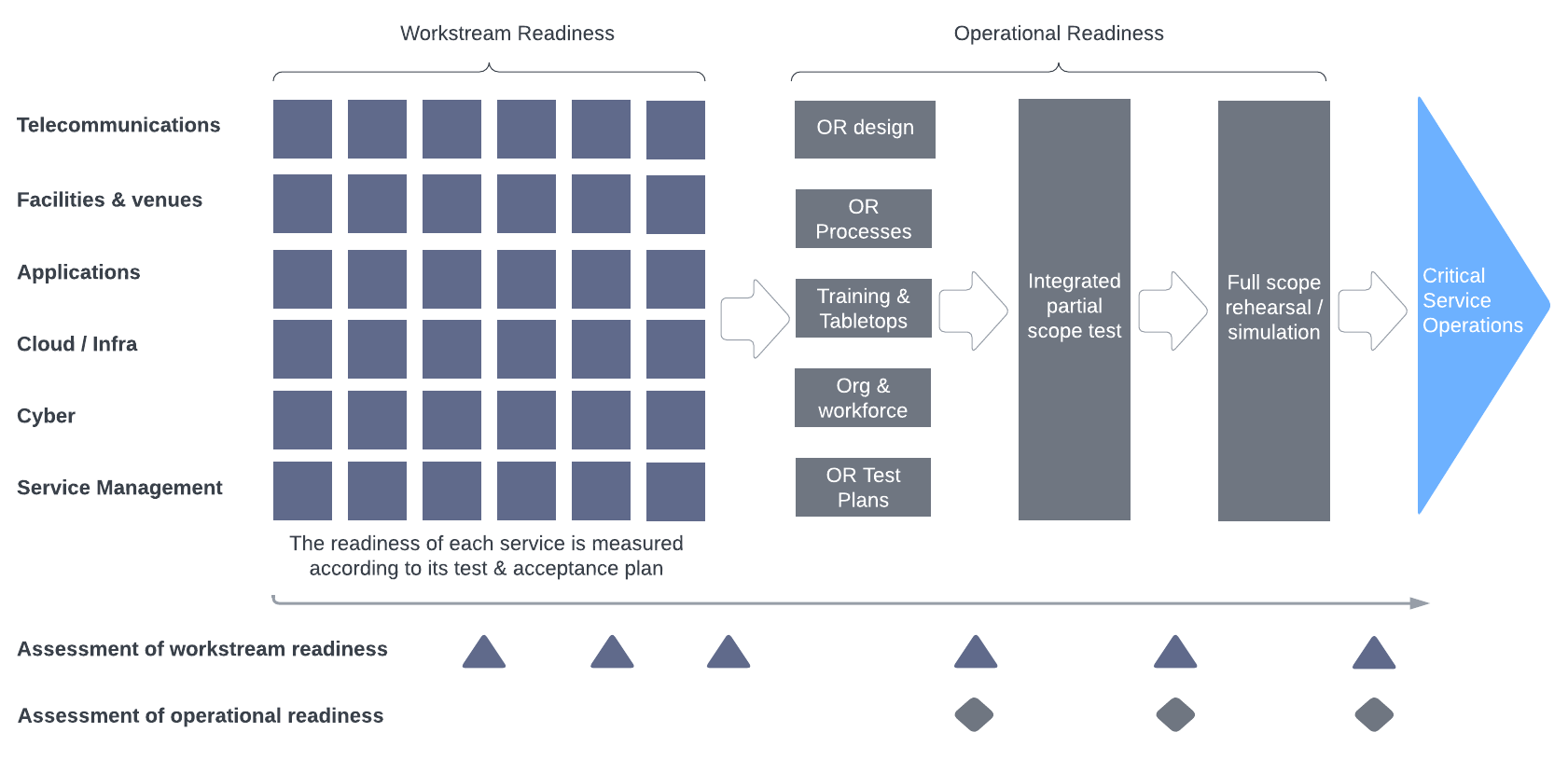

There are two key dimensions of readiness. The first is workstream readiness, where each workstream measures its level of implementation of services, facilities and infrastructure against a defined set of acceptance criteria in both quantitative and qualitative terms. The second is integrated operational readiness, a set of cross-functional activities that primarily test the capability of people to operate the services, meet the target SLAs, manage incidents and communicate effectively. For example, a technology readiness programme for critical service operations may look something like the following:

The readiness of each service is measured against the implementation and testing plans for its workstream. Quantitative measures could include the % of services available vs plan, the % of equipment operational vs plan, venue or facility readiness, and the % of processes defined and people trained. Each of these can be measured in its own way and tracked at regular intervals. Together, they show the level of implementation of each service within each workstream.
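As a rough illustration, the quantitative side can be reduced to ratios of actual versus planned items at each reporting date. The sketch below assumes a hypothetical `Measure` record per indicator and an unweighted average across indicators; a real programme may weight measures differently or report them separately rather than combining them.

```python
from dataclasses import dataclass


@dataclass
class Measure:
    """One quantitative readiness measure for a workstream (hypothetical structure)."""
    name: str
    planned: int  # items planned to be ready by the reporting date
    actual: int   # items actually ready at the reporting date

    def percent_vs_plan(self) -> float:
        # Nothing planned yet means nothing outstanding; cap over-delivery at 100%.
        if self.planned == 0:
            return 100.0
        return min(100.0, 100.0 * self.actual / self.planned)


def workstream_implementation(measures: list[Measure]) -> float:
    """Unweighted mean of percent-vs-plan across a workstream's measures."""
    if not measures:
        raise ValueError("workstream has no measures")
    return sum(m.percent_vs_plan() for m in measures) / len(measures)


# Made-up figures for one workstream at one reporting date.
network_ws = [
    Measure("services available", planned=20, actual=18),
    Measure("equipment operational", planned=50, actual=45),
    Measure("processes defined", planned=10, actual=8),
    Measure("people trained", planned=40, actual=30),
]
print(workstream_implementation(network_ws))  # 83.75
```

Recording the same measures at each interval gives a trend line per workstream rather than a single snapshot.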

It can also be beneficial to have a qualitative measure, in which the responsible service owner assesses readiness for the upcoming activity against the plan of what should be delivered. For example, the following criteria could be used as a self-assessment:

That assessment by the responsible service owner could be based on a combination of:

The result is an ongoing workstream readiness assessment tracker which combines implementation progress and readiness for the next test phase as shown below.
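The tracker can be sketched as a list of periodic entries, each pairing the quantitative implementation percentage with the service owner's qualitative view. Because the self-assessment criteria are defined per programme, the sketch assumes a hypothetical three-level RAG scale and a hypothetical 80% threshold; all names and data are illustrative.

```python
from dataclasses import dataclass
from enum import Enum


class SelfAssessment(Enum):
    # Hypothetical three-level scale; replace with the criteria your programme defines.
    GREEN = "ready for the next test phase"
    AMBER = "ready with known gaps and agreed mitigations"
    RED = "not ready"


@dataclass
class TrackerEntry:
    workstream: str
    week: int                    # reporting period
    implementation_pct: float    # quantitative progress vs plan
    assessment: SelfAssessment   # service owner's qualitative view
    comment: str = ""


def latest_status(entries):
    """Most recent entry per workstream."""
    latest = {}
    for entry in entries:
        current = latest.get(entry.workstream)
        if current is None or entry.week > current.week:
            latest[entry.workstream] = entry
    return latest


def needs_attention(entries, threshold=80.0):
    """Workstreams not assessed GREEN, or whose implementation lags the threshold."""
    return sorted(
        ws for ws, entry in latest_status(entries).items()
        if entry.assessment is not SelfAssessment.GREEN
        or entry.implementation_pct < threshold
    )


# Made-up tracker data over two reporting weeks.
entries = [
    TrackerEntry("network", 1, 70.0, SelfAssessment.AMBER),
    TrackerEntry("network", 2, 92.0, SelfAssessment.GREEN),
    TrackerEntry("ticketing", 2, 85.0, SelfAssessment.AMBER, "spares not yet on site"),
    TrackerEntry("results", 2, 75.0, SelfAssessment.GREEN),
]
print(needs_attention(entries))  # ['results', 'ticketing']
```

Combining both views is useful precisely where they disagree: in the example, "results" is self-assessed GREEN while its implementation figures lag, which is a prompt for a conversation with the service owner.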

Operational readiness activities include preparing procedures, delivering training, holding tabletop sessions, resource planning and rostering, mobilisation, and conducting rehearsals and simulations. More details can be found here.

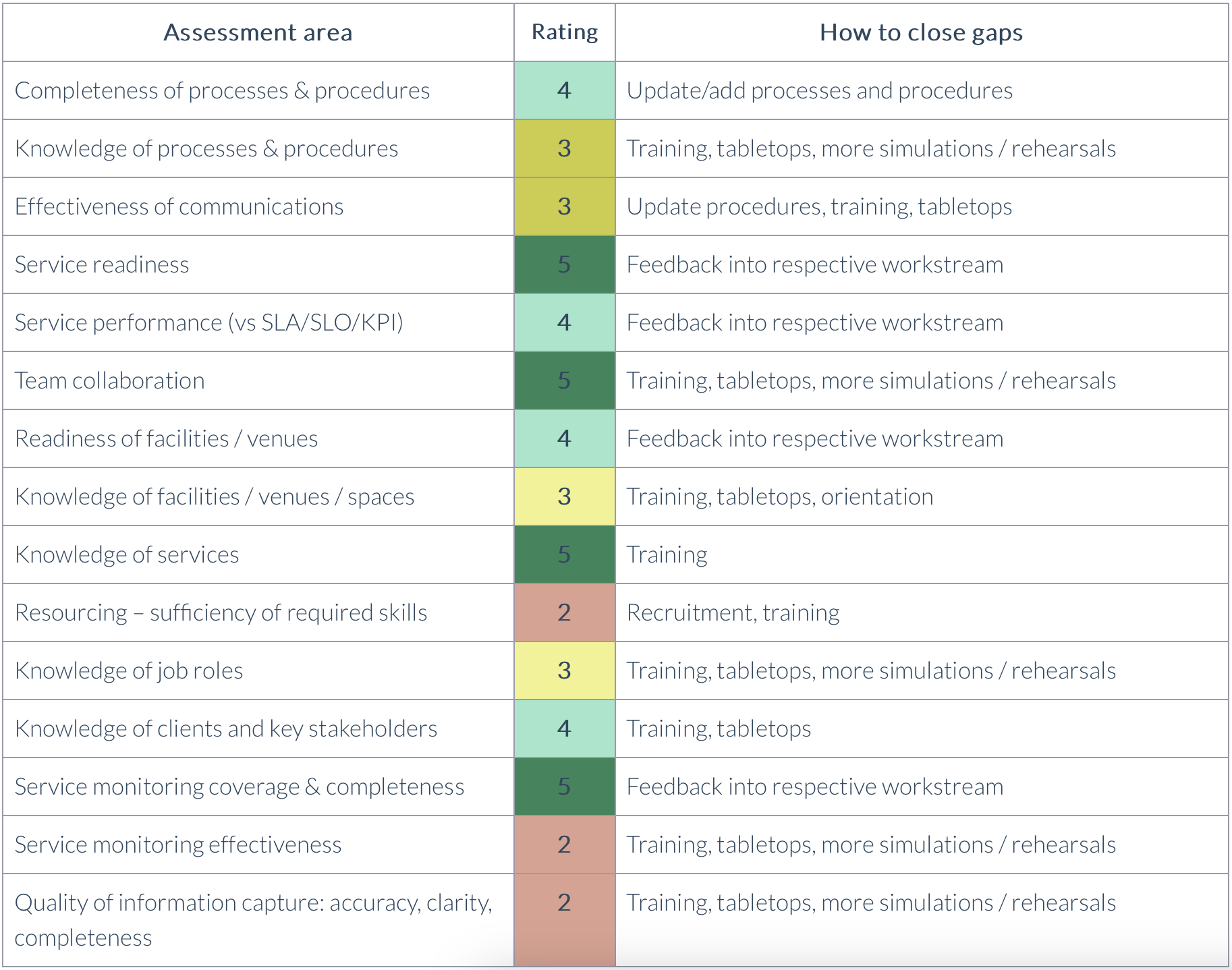

In contrast to measuring workstream readiness, the assessment of operational readiness is best performed by independent observers who are not responsible for delivering the services.

A simple assessment of operational readiness could take the form of a post-test debrief, in which issues are identified against the goals of the exercise and lessons learned are captured. In general, the goal is to meet the defined service performance criteria (e.g. SLAs). But there are also more granular objectives, such as knowledge of procedures, knowledge of technologies and systems, quality of communication channels, and the ability to adapt to unplanned circumstances, all of which contribute to improved performance.

Applying a more systematic approach, as outlined below, helps assess the level of readiness consistently across multiple services, teams and locations. It enables improvement actions to be targeted where they are most needed and ensures that nothing is missed in closing the readiness gaps.
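A systematic version of the debrief might have independent observers score each team against the granular objectives, then aggregate the scores to locate gaps. The sketch below uses the objectives named above, but the 1 to 5 scale, the target of 4 and the team names are assumptions for illustration. It also lists objectives that no observer has scored, so that gaps are not missed simply because they were never observed.

```python
from collections import defaultdict
from statistics import mean

# Granular objectives named in the text; the 1-5 scoring scale and the
# target of 4 are illustrative assumptions, not a standard.
OBJECTIVES = (
    "service performance (SLAs)",
    "knowledge of procedures",
    "knowledge of technologies and systems",
    "communication channels",
    "adaptability to unplanned events",
)


def readiness_gaps(observations, target=4.0):
    """Return {team: [(objective, mean score), ...]} for objectives scoring
    below target, worst first. Each observation is (team, objective, score)
    recorded by an independent observer during an exercise."""
    scores = defaultdict(list)
    for team, objective, score in observations:
        if objective not in OBJECTIVES:
            raise ValueError(f"unknown objective: {objective!r}")
        if not 1 <= score <= 5:
            raise ValueError(f"score out of range: {score}")
        scores[(team, objective)].append(score)

    gaps = defaultdict(list)
    for (team, objective), values in scores.items():
        avg = mean(values)
        if avg < target:
            gaps[team].append((objective, avg))
    return {team: sorted(items, key=lambda item: item[1]) for team, items in gaps.items()}


def unobserved(observations, teams):
    """(team, objective) pairs that no observer has scored yet."""
    seen = {(team, objective) for team, objective, _ in observations}
    return sorted((t, o) for t in teams for o in OBJECTIVES if (t, o) not in seen)


# Made-up observer scores from two teams after a simulation.
obs = [
    ("venue ops", "knowledge of procedures", 3),
    ("venue ops", "knowledge of procedures", 4),
    ("venue ops", "communication channels", 5),
    ("tech ops", "communication channels", 2),
    ("tech ops", "communication channels", 3),
    ("tech ops", "knowledge of technologies and systems", 3),
]
print(readiness_gaps(obs))
print(unobserved(obs, ["venue ops", "tech ops"]))
```

Sorting each team's gaps worst first gives a simple basis for prioritising improvement actions before the next exercise.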

When launching critical service operations, high visibility to customers and key stakeholders demands seamless performance. Part of that comes from designing and building the services robustly enough to meet performance expectations. But issues, minor or major, will inevitably occur, and the speed and effectiveness of their resolution depend on a well-trained and coordinated team that can minimise any visible impact. Measuring readiness systematically gives the team confidence, going into the launch of critical operations, that it has been pressure-tested and is ready to deal with any situation that may arise.